Research Projects

Zero-Shot Coreset Selection via Iterative Subspace Sampling

Zero-Shot Coreset Selection (ZCore) is a method of coreset selection for unlabeled data. Deep learning methods rely on massive datasets, resulting in substantial costs for storage, annotation, and model training. Coreset selection aims to select a subset of the data to train models at lower cost while ideally performing on par with training on the full data. To maximize performance, current state-of-the-art coreset methods select data using dataset-specific ground truth labels and training. However, these methodological requirements prevent selection at scale on real-world, unlabeled data. As a solution, ZCore addresses the problem of coreset selection without labels or training on candidate data. Instead, ZCore uses existing foundation models to generate a zero-shot embedding space for unlabeled data, then quantifies the relative importance of each example based on overall coverage and redundancy within the embedding distribution. On ImageNet, the ZCore coreset achieves higher accuracy than previous label-based coresets at a 90% prune rate, while removing annotation requirements for 1.15 million images.
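The idea of scoring unlabeled examples by coverage and redundancy in an embedding space can be illustrated with a short, dependency-free Python sketch. This is not ZCore's published algorithm: the sampling scheme (uniform points inside the embedding's bounding box, within randomly chosen subspaces), the k-nearest-neighbor redundancy penalty, and the names `zero_shot_scores` and `select_coreset` are hypothetical choices made for illustration. The embeddings are assumed to come from a frozen foundation model.

```python
import math
import random


def zero_shot_scores(embeddings, n_samples=2000, subspace_dim=2, k=3,
                     redundancy_weight=1.0, seed=0):
    """Hypothetical coverage-minus-redundancy score for each example.

    embeddings: list of equal-length float lists (e.g. frozen-model features).
    """
    rng = random.Random(seed)
    n, d = len(embeddings), len(embeddings[0])
    lo = [min(e[j] for e in embeddings) for j in range(d)]
    hi = [max(e[j] for e in embeddings) for j in range(d)]

    # Coverage: draw random points in random low-dimensional subspaces and
    # credit the example nearest to each point within that subspace.
    coverage = [0] * n
    for _ in range(n_samples):
        dims = rng.sample(range(d), subspace_dim)
        point = [rng.uniform(lo[j], hi[j]) for j in dims]
        nearest = min(
            range(n),
            key=lambda i: sum((embeddings[i][j] - p) ** 2 for j, p in zip(dims, point)),
        )
        coverage[nearest] += 1

    # Redundancy: penalize examples whose k nearest neighbors are close by.
    scores = []
    for i in range(n):
        dists = sorted(math.dist(embeddings[i], embeddings[m]) for m in range(n) if m != i)
        redundancy = 1.0 / (1.0 + sum(dists[:k]) / k)
        scores.append(coverage[i] / n_samples - redundancy_weight * redundancy)
    return scores


def select_coreset(embeddings, budget, **kwargs):
    """Return indices of the `budget` highest-scoring examples."""
    scores = zero_shot_scores(embeddings, **kwargs)
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:budget]
```

With ten near-duplicate points and one isolated point, `select_coreset(emb, 1)` keeps the isolated point, since it covers a large region and has no close neighbors. Realistic dataset sizes would call for vectorized approximate nearest-neighbor search instead of these linear scans and the O(n²) redundancy loop.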

Visualizing Model Certainty in the Unknown

An Efficient Technique to Quickly Understand Visual AI Model Performance on Unlabeled Data at Scale

How to Tame Your (Data) Dragon

Zero-Shot Data Reduction Techniques for Efficient Robotics & Visual AI Development

Mobile Robot Manipulation using Pure Object Detection

This work addresses the problem of mobile robot manipulation using object detection. Our approach uses detection and control as complementary functions that learn from real-world interactions. We develop an end-to-end manipulation method based solely on detection and introduce Task-focused Few-shot Object Detection (TFOD) to learn new objects and settings. Our robot collects its own training data and automatically determines when to retrain detection to improve performance across various subtasks (e.g., grasping). Notably, detection training is low-cost, and our robot learns to manipulate new objects using as few as four clicks of annotation. In physical experiments, our robot learns visual control from a single click of annotation and a novel update formulation, manipulates new objects in clutter and other mobile settings, and achieves state-of-the-art results on an existing visual servo control and depth estimation benchmark. Finally, we develop a TFOD Benchmark to support future object detection research for robotics.

Learning Object Depth from Motion and Segmentation

This work addresses the problem of learning to estimate the depth of segmented objects given some measurement of camera motion (e.g., from robot kinematics or vehicle odometry). We achieve this by, first, designing a deep network that estimates the depth of objects using only segmentation masks and camera movement and, second, introducing the Object Depth via Motion and Segmentation Dataset (ODMS). ODMS is the first dataset for segmentation-based depth estimation and enables learning-based algorithms to be trained in this new problem space. ODMS training data are extensible and configurable, with each example consisting of a series of object segmentation masks, camera movement distances, and ground truth object depth. As a benchmark evaluation, ODMS has four validation and test sets with 15,650 examples in multiple domains, including robotics and driving. Using an ODMS-trained network, we estimate object depth in real-time robot grasping experiments, demonstrating how ODMS is a viable tool for 3D perception from a single RGB camera.
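The geometric cue behind segmentation-based depth estimation can be shown in closed form for an idealized case. Assuming a pinhole camera that translates a known distance d straight toward a rigid object along the optical axis, the object's linear image size s scales as 1/z, so s1 * z1 = s2 * z2 with z1 = z2 + d, which gives z2 = s1 * d / (s2 - s1). The sketch below (the function name is hypothetical) applies this to two mask areas using s = sqrt(A). It only illustrates the underlying cue; the work described above trains a network on a series of masks and motion distances rather than relying on this two-frame formula.

```python
import math


def depth_from_mask_areas(area_start, area_end, distance_moved):
    """Estimate final object depth from two segmentation-mask areas.

    Assumes a pinhole camera moving `distance_moved` straight toward a rigid
    object, so linear image size scales as 1/depth and mask area as 1/depth**2.
    """
    s1 = math.sqrt(area_start)  # linear image size before the motion
    s2 = math.sqrt(area_end)    # linear image size after the motion
    if s2 <= s1:
        raise ValueError("mask must grow when moving toward the object")
    # s1 * z1 = s2 * z2 and z1 = z2 + d  =>  z2 = s1 * d / (s2 - s1)
    return s1 * distance_moved / (s2 - s1)
```

For example, an object seen at 2 m and then at 1 m after a 1 m approach has its mask area quadruple, and `depth_from_mask_areas(100, 400, 1.0)` recovers the final depth of 1.0.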

Depth from Motion and Detection

(New) Detection-based dataset and methods with better depth estimation results, greater motion flexibility, and more application areas.

BubbleNets: Learning to Select the Guidance Frame in Video

Semi-supervised video object segmentation has made significant progress on real and challenging videos in recent years. The current paradigm for segmentation methods and benchmark datasets is to segment objects in video given a single annotation in the first frame. However, we find that segmentation performance across the entire video varies dramatically when an alternative frame is selected for annotation. This work addresses the problem of learning to suggest the single best frame across the video for user annotation, which is, in fact, never the first frame of the video. We achieve this by introducing BubbleNets, a novel deep sorting network that learns to select frames using a performance-based loss function, which lets us derive expansive amounts of training examples from already existing datasets. Using BubbleNets, we achieve an 11% relative improvement in segmentation performance on the DAVIS benchmark without any changes to the underlying segmentation method. This work was a finalist for the Best Paper Award at CVPR.

Michigan engineer article →
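The sorting half of a "deep sorting network" can be sketched as a bubble-sort pass driven by a pairwise comparator: whenever the comparator prefers the earlier of two adjacent frames, the two are swapped, so a consistent comparator carries its most-preferred frame to the end of the list. In this sketch `prefer` is a hypothetical stand-in for the learned network, and the toy scores in the usage are invented; only the sorting mechanics are shown.

```python
def bubble_select(frames, prefer):
    """Return the frame a single bubble-sort pass carries to the end.

    prefer(a, b) -> True if frame `a` is predicted to be the better frame to
    annotate than frame `b` (a stand-in for a learned pairwise comparator).
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame")
    for i in range(len(frames) - 1):
        # Swap so the preferred frame of each adjacent pair moves rightward.
        if prefer(frames[i], frames[i + 1]):
            frames[i], frames[i + 1] = frames[i + 1], frames[i]
    return frames[-1]


# Usage with invented per-frame scores standing in for network predictions.
toy_scores = {0: 0.2, 1: 0.9, 2: 0.5, 3: 0.1}
best = bubble_select([0, 1, 2, 3], lambda a, b: toy_scores[a] > toy_scores[b])
```

One plausible reading of the training-data claim follows from the pairwise framing: a video with n frames of known segmentation performance yields n(n - 1)/2 frame pairs to learn from, far more examples than one label per video.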

Video Object Segmentation-Based Visual Servo Control

To be useful in everyday environments, robots must be able to identify and locate unstructured, real-world objects. In recent years, video object segmentation has made significant progress on densely separating such objects from background in real and challenging videos. This work addresses the problem of identifying generic objects and locating them in 3D from a mobile robot platform equipped with an RGB camera. We achieve this by introducing a video object segmentation-based approach to visual servo control and active perception. We validate our approach in experiments using an HSR platform, which subsequently identifies, locates, and grasps objects from the YCB object dataset. We also develop a new Hadamard-Broyden update formulation, which enables HSR to automatically learn the relationship between actuators and visual features without any camera calibration. Using a variety of learned actuator-camera configurations, HSR also tracks people and other dynamic articulated objects.
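The classical Broyden update refines an estimated Jacobian J, which maps actuator changes dq to visual-feature changes de, after each observed motion: J ← J + α (de − J dq) dqᵀ / (dqᵀ dq). The name "Hadamard-Broyden" suggests an elementwise (Hadamard) product; the sketch below assumes a hypothetical binary mask H applied elementwise to that rank-one correction, so chosen actuator-feature pairs are never updated. The function name, the mask, and the step size `alpha` are illustrative assumptions, not the published formulation.

```python
def hadamard_broyden_update(J, dq, de, H, alpha=1.0):
    """Broyden-style Jacobian update masked elementwise by H (hypothetical sketch).

    J:  m x n list of lists estimating d(features) / d(actuators)
    dq: length-n actuator change; de: length-m observed feature change
    H:  m x n 0/1 mask choosing which feature-actuator entries may change
    """
    m, n = len(J), len(dq)
    denom = sum(x * x for x in dq)
    if denom == 0.0:
        return [row[:] for row in J]  # no actuator motion, nothing to learn
    # Prediction error of the current Jacobian estimate for this motion.
    predicted = [sum(J[r][c] * dq[c] for c in range(n)) for r in range(m)]
    err = [de[r] - predicted[r] for r in range(m)]
    # Rank-one secant correction, gated entry by entry by the mask H.
    return [
        [J[r][c] + alpha * H[r][c] * err[r] * dq[c] / denom for c in range(n)]
        for r in range(m)
    ]
```

With H all ones and `alpha = 1` this reduces to the standard Broyden update, which satisfies the secant condition J_new dq = de; masking trades that exactness for a fixed sparsity structure.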

Strictly Unsupervised Video Object Segmentation

We investigate the problem of strictly unsupervised video object segmentation, i.e., the separation of a primary object from background in video without a user-provided object mask or any training on an annotated dataset. We find foreground objects in low-level vision data using a John Tukey-inspired measure of “outlierness.” This Tukey-inspired measure also estimates the reliability of each data source as video characteristics change (e.g., a camera starts moving). The proposed method achieves state-of-the-art results for strictly unsupervised video object segmentation on the challenging DAVIS dataset. Finally, we use a variant of the Tukey-inspired measure to combine the output of multiple segmentation methods, including those using supervision during training, runtime, or both. This combination is collectively more robust and improves the Jaccard measure of its constituent methods by as much as 28%. This research is performed in collaboration with Professor Jason Corso.
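Tukey's classical fences flag a value as an outlier when it exceeds Q3 + k·IQR, where Q1 and Q3 are the lower and upper quartiles, IQR = Q3 − Q1, and k = 1.5 by convention. A minimal "outlierness" score built on that rule returns how far each value lies beyond the upper fence, in IQR units, and zero for inliers. The function name, the `eps` guard, and scoring only the upper tail are assumptions for illustration; the measure in this work is Tukey-inspired and may differ in detail.

```python
import statistics


def tukey_outlierness(values, k=1.5, eps=1e-12):
    """Distance of each value beyond Tukey's upper fence, in IQR units (0 for inliers)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # needs at least two values
    iqr = q3 - q1
    upper_fence = q3 + k * iqr
    scale = max(iqr, eps)  # guard against a zero IQR (e.g. many identical values)
    return [max(0.0, (x - upper_fence) / scale) for x in values]
```

Applied to one low-level cue at a time (for instance, per-pixel motion magnitude), high scores would mark candidate foreground, and how cleanly a cue separates outliers from the bulk is one conceivable proxy for that cue's reliability.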

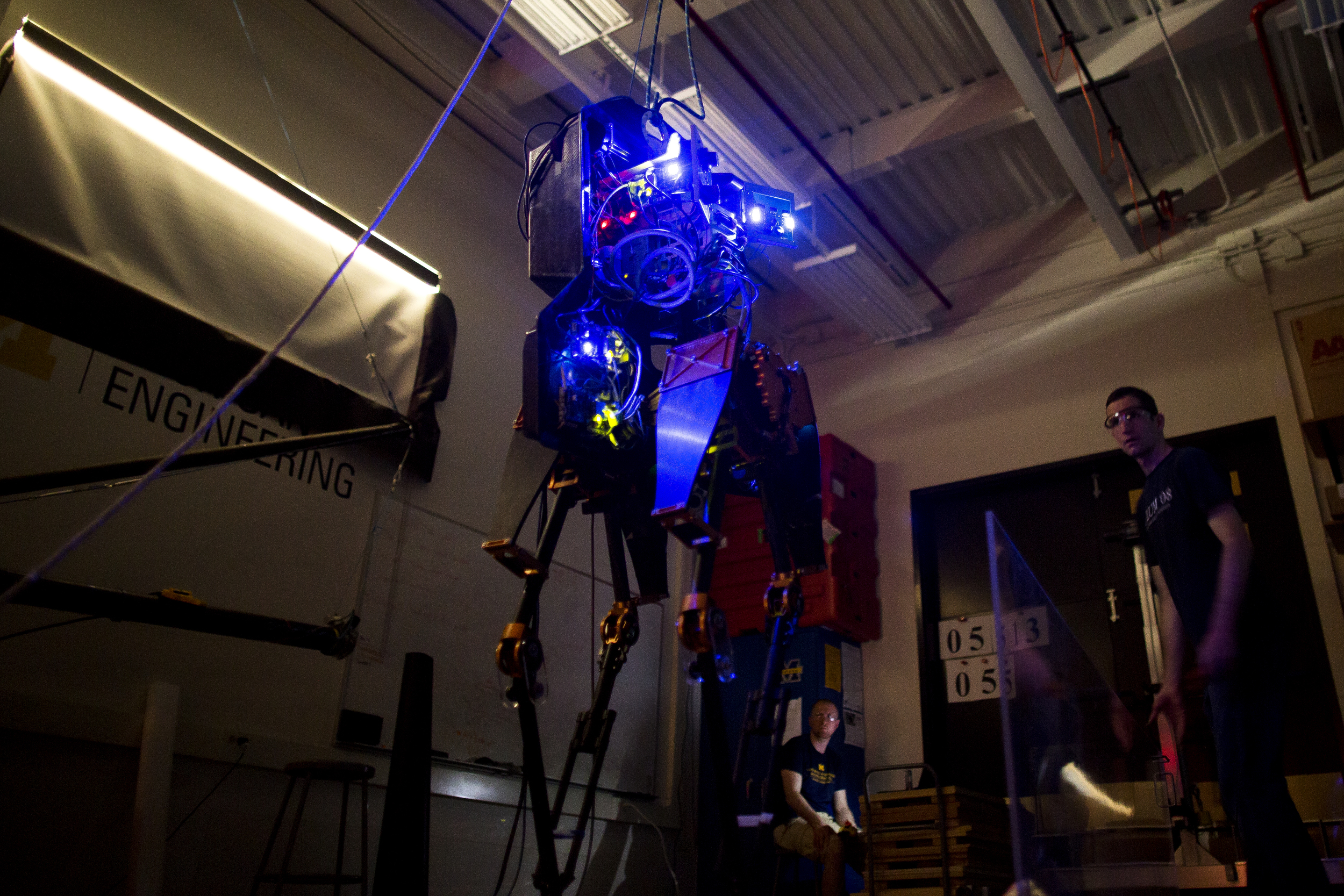

Robust Robot Walking Control

Bipedal locomotion is well suited for mobile robotics because it promises to allow robots to traverse difficult terrain and work effectively in man-made environments. Despite this inherent advantage, however, no existing bipedal robot achieves human-level performance in multiple environments. A key challenge in robotic bipedal locomotion is the design of feedback controllers that function well in the presence of uncertainty, in both the robot and its environment. To achieve such walking, we design feedback controllers and periodic gaits that function well in the presence of modest terrain variation, without reliance on perception or a priori knowledge of the environment. Model-based design methods are introduced and subsequently validated in simulation and experiment on MARLO, an underactuated three-dimensional bipedal robot that is roughly human size and has six actuators and thirteen degrees of freedom. Innovations include virtual nonholonomic constraints that enable continuous velocity-based posture regulation and an optimization method that accounts for multiple types of disturbances and more heavily penalizes deviations that persist during critical stages of walking. Using a single continuously defined controller taken directly from optimization, MARLO traverses sloped sidewalks and parking lots, terrain covered with randomly thrown boards, and grass fields, all while maintaining average walking speeds between 0.9 and 0.98 m/s and setting a new precedent for walking efficiency in realistic environments. This research is performed in collaboration with Professor Jessy Grizzle.

Popular Science article →
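Virtual constraints are usually written as output functions that feedback drives to zero. As a generic illustration only (the notation is assumed here, not taken from this work): with joint coordinates q, a phase variable s(q), a walking-velocity measure v, and a selection matrix H_0 that picks one controlled quantity per actuator (six for MARLO), a velocity-dependent constraint and a PD-style output controller can be written as below. Letting the desired trajectory h_d depend on v is what makes the constraint velocity-dependent, and hence nonholonomic in general, so posture can adjust continuously with speed.

$$
y = H_0\, q - h_d\big(s(q),\, v\big), \qquad \ddot{y} = -K_P\, y - K_D\, \dot{y}
$$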

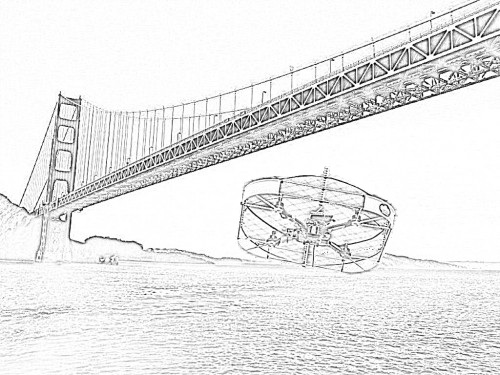

Wireless Power Transfer to Ground Sensors from a UAV

Wireless magnetic resonant power transfer is an emerging technology that has many advantages over other wireless power transfer methods due to its safety, lack of interference, and efficiency at medium ranges. We develop a wireless magnetic resonant power transfer system that enables unmanned aerial vehicles (UAVs) to provide power to, and recharge batteries of, wireless sensors and other electronics far removed from the electric grid. We address the difficulties of implementing and outfitting this system on a UAV with limited payload capabilities and develop a controller that maximizes the received power as the UAV moves into and out of range. We experimentally demonstrate the prototype wireless power transfer system by using a UAV to transfer nearly 5 W of power to a ground sensor. Motivated by limitations of manual piloting, steps are taken toward autonomous navigation to locate receivers and maintain more stable power transfer. Novel sensors are created to measure high-frequency alternating magnetic fields, and data from experiments with these sensors illustrate how they can be used for locating nodes receiving power and optimizing power transfer. This research is performed in collaboration with Professor Carrick Detweiler.
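The efficiency at medium ranges can be made concrete with the standard figure of merit for two magnetically coupled resonators: with coupling coefficient k and coil quality factors Q1 and Q2, U = k·sqrt(Q1·Q2), and the best achievable link efficiency with an optimally matched load is U² / (1 + sqrt(1 + U²))². This is a textbook idealization, not the model or hardware used in this work, and the function name is hypothetical.

```python
import math


def max_link_efficiency(k, q_tx, q_rx):
    """Upper bound on two-coil resonant link efficiency with an optimal load.

    k:    magnetic coupling coefficient between the coils (0..1)
    q_tx: quality factor of the transmitting resonator
    q_rx: quality factor of the receiving resonator
    """
    u = k * math.sqrt(q_tx * q_rx)  # figure of merit U
    return u ** 2 / (1 + math.sqrt(1 + u ** 2)) ** 2


weak = max_link_efficiency(0.01, 100, 100)   # U = 1
strong = max_link_efficiency(0.1, 100, 100)  # U = 10
```

Because k drops steeply as the coils separate, a UAV drifting out of position lowers U and this efficiency bound, which is one reason actively maximizing received power matters during flight.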